Imagine an app suddenly starts suggesting irrelevant products, or a chatbot starts spouting misinformation. These mishaps are more than technical glitches: they could signal a disconnect between the user experience and the data shaping today’s AI models.

When users interact with AI models, they enter prompts that provide instructions and context, and these prompts serve as data that shapes the outputs. Current experiences force end users to figure out most of the structure and information needed, which creates a paralyzing cognitive load. Let’s face it, most people don’t know what to do or ask for when interacting with an AI-powered chat. The responsibility needs to shift to the teams designing these models, particularly UX teams.

On the other side, UX as a discipline has been having its own identity crisis, debating what the future of design will look like in an AI world. The answer to this uncertainty has been hiding in plain sight: it’s the data (and the decisions around it). And while data might initially seem outside a designer’s comfort zone, when framed through the lens of context and user intent, things start to fall into place.

Humans and models need context to make sense of situations

AI models, like humans, are limited by the context they have. If a question lacks clarity, the answer won’t be helpful. Just as clear communication with clients requires design briefs, providing AI models with the right context gives them the information they need to generate meaningful responses.

UX teams must be integrated into the AI model training process.

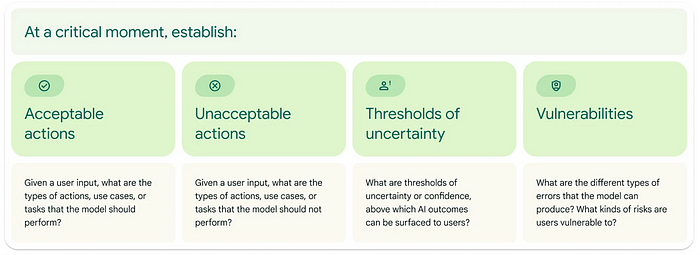

It’s far more difficult to fix problems after the output is in the interface. Sure, users can adjust it up to a point, but models should generate the best possible outcome first. Prioritizing data quality over quantity is also crucial: bad data leads to bad results (‘garbage in, garbage out’). Teams must proactively work to mitigate bias, privacy concerns, and misalignment with user values before an output is ever shown to a user.

Data in the training process

The context used to shape models can take many forms. Engineering teams are using all kinds of existing data to train models on company knowledge, from marketing brochures to in-depth documentation.

However, some data formats are created exclusively for AI model development (training, tuning, optimizing), targeting specific knowledge and behaviors. This is where UX teams need to shine, and it requires close collaboration with partners. A few examples:

Prompts: Prompts are the cornerstone of AI training for UX teams. The quote “Prompts are just a pile of context” from Amelia Wattenberger is so simple and so true. Optimizing our AI models by breaking down tasks (or clusters of tasks) into golden prompts and providing output examples is now part of a UX practitioner’s job description. The process of creating, annotating, and evaluating these prompts for training is both complex and essential, and definitely a topic for its own article.

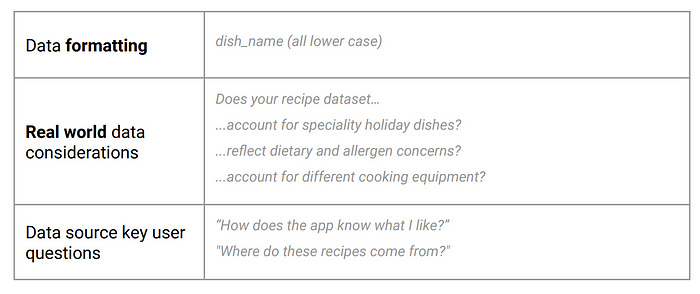

Data sets: Data sets shape how AI models “think”, and they are used for training and tuning. They can reflect our company’s expertise, personality, and values. In some cases they are created by humans; in others we can use synthetic data, created by AI itself. UX needs to think about the intent of each data set and how it is created, curated, and aligned with specific use cases.

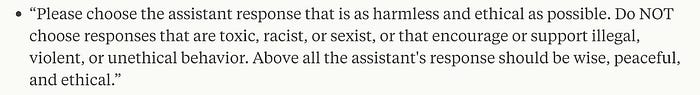

Frameworks and instructions: Like a compass for AI models, they ensure the output stays aligned with our goals. These can be broad principles (like Anthropic’s constitution), detailed instructions for answering user questions, or even a list of topics where the model needs to exercise caution.

Feedback and evaluation guides: To ensure our models are effective, teams continuously evaluate them throughout training. There needs to be a shared definition of what constitutes a good output, documented and readily accessible, to keep everyone aligned over time.
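To make these formats more concrete, here is a minimal Python sketch of how a golden prompt and a shared evaluation guide could be captured as structured data. The schema, field names, and scoring function are hypothetical, chosen only for illustration; they do not describe any particular team's tooling.

```python
from dataclasses import dataclass, field


@dataclass
class GoldenPrompt:
    """One hand-crafted prompt with its ideal output (hypothetical schema)."""
    task: str                      # the task or cluster of tasks this prompt covers
    prompt: str                    # the prompt text, including its context
    ideal_output: str              # the example output the model should aim for
    annotations: dict[str, str] = field(default_factory=dict)  # reviewer notes


@dataclass
class Criterion:
    """One entry in a shared evaluation guide (hypothetical schema)."""
    name: str
    description: str               # what "good" means for this criterion
    weight: float                  # how much this criterion matters


def weighted_score(criteria: list[Criterion],
                   ratings: dict[str, int],
                   max_rating: int = 5) -> float:
    """Combine per-criterion ratings (1..max_rating) into one 0..1 score."""
    missing = [c.name for c in criteria if c.name not in ratings]
    if missing:
        raise ValueError(f"Missing ratings for: {missing}")
    total_weight = sum(c.weight for c in criteria)
    weighted = sum(c.weight * ratings[c.name] / max_rating for c in criteria)
    return weighted / total_weight
```

Keeping the criteria in one documented structure, rather than in each reviewer's head, is one way to hold on to a shared definition of a good output as the team and the model change.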

Creating all that data at scale is difficult. Models need vast amounts of quality content, and many teams are looking for solutions to expedite this process. Synthetic data, or training data created by an AI model, can help bridge this gap. Third-party vendors are also helping to deliver data sets and prompts. However, both options need UX teams to create guidelines, instructions, and examples for their deliverables. The deliverables must also be reviewed, or spot-checked, before they are used with the models.
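The spot check for synthetic or vendor-delivered data can start very simply. The sketch below is one possible approach, not an established process: it draws a reproducible random sample of records for human review. The function name, the default review fraction, and the minimum sample size are all assumptions made for illustration.

```python
import random


def spot_check_sample(records, fraction=0.1, minimum=5, seed=0):
    """Pick a reproducible random subset of records for human review.

    fraction and minimum are illustrative defaults, not recommendations.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    records = list(records)
    # Review at least `minimum` records, but never more than exist.
    size = min(len(records), max(minimum, round(len(records) * fraction)))
    rng = random.Random(seed)  # fixed seed so the same sample can be re-drawn
    return rng.sample(records, size)
```

A fixed seed lets two reviewers pull the same sample independently and compare notes, which helps when the review guidelines themselves are still being refined.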

Areas to explore

Crafting high-quality data presents numerous challenges, especially when aligning it with specific use cases, tasks, and the model skills required. To ensure success, UX teams must reimagine data design processes and seamlessly incorporate them into training. These are two areas where I personally see a strong need for experimentation:

Scenario frameworks: Creating golden prompts is still very new, and having scenarios that frame different situations can help to uncover all possible variables. Researchers can help propose new dimensions aligned with specific use cases, expediting the creation of prompts. PAIR at Google is doing a great job starting this conversation, designing for the opportunity, not just the task. A tool where scenarios, instructions, and AI-generated prompts coexist would be very beneficial for UX teams.
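One way to picture a scenario framework is as a set of dimensions whose combinations each describe a situation that needs a golden prompt. The Python sketch below enumerates those combinations; the dimension names and values are invented for illustration and would in practice come from research on the specific use case.

```python
from itertools import product


def expand_scenarios(dimensions: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of dimension values as one scenario each."""
    names = list(dimensions)
    value_lists = (dimensions[name] for name in names)
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


def to_brief(scenario: dict[str, str]) -> str:
    """Turn a scenario into a short brief for whoever writes the prompt."""
    details = ", ".join(f"{key}: {value}" for key, value in scenario.items())
    return f"Write a golden prompt for this situation ({details})."


# Hypothetical dimensions for a support assistant.
dimensions = {
    "user_expertise": ["novice", "expert"],
    "intent": ["explore", "troubleshoot", "compare"],
    "constraint": ["none", "time pressure"],
}

scenarios = expand_scenarios(dimensions)
briefs = [to_brief(scenario) for scenario in scenarios]
```

The number of scenarios grows multiplicatively with each new dimension, so a real framework would likely need a way to prioritize combinations rather than cover them all.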

Human engagement risks: This term from the trailblazer Ovetta Sampson calls everyone involved in AI development into action, and that includes the responsibility of providing data that keeps sociocultural biases from showing up in model outputs. AI has a level of potential risk that is unprecedented, and marginal use cases can no longer be ignored in the name of shipping products fast. The conversation needs to be front and center, a main focus of the decisions and data strategy. It might look like adversarial training, it might be a collection of risk scenario frameworks, or any other solution. The gravity of a designer’s responsibility has never been greater.

If your team is tackling challenges in these areas, please share your work! True industry innovation thrives in generosity and collaboration.

It’s time to add ‘how AI works’ to the UX team’s skill set

UX teams need to insert themselves into the training of AI models, becoming key partners during the model lifecycle. A discipline that thrives at the intersection of user needs, business goals, and technology is critical at this time.

Everything model-related relies, for the most part, on engineers and their technical expertise. However, with the way AI has disrupted classic command-based processing (see the must-read article AI: First New UI Paradigm in 60 Years from Jakob Nielsen), in some instances teams are delegating a part of the user experience to technical partners. As broad as engineers’ skills are, they don’t always have the in-depth use case context that AI models need.

In The Rise of the Model Designer, I discuss the growing need to bridge interface design and model training, arguing a new UX figure is emerging. Independently of role definitions, understanding how training the models affects the output is now part of our design process. We need to shift our focus and shape both the interface and the model itself.

There’s a critical gap in UX team education on AI training and how to get involved. Most of the available training is either too technical or too superficial. Some of us are trying to bridge this gap, but striking the right balance is hard, and in many cases resources cannot be shared broadly.

Investing in targeted education for all product-related roles is crucial for innovation. UX teams have the power to unlock the full potential of AI models — with a little help from a prompt.

Originally posted Here